ccr_rock

ROCK: Causal Inference Principles for Reasoning about Commonsense Causality

This repo contains the official code for the ICML 2022 paper "ROCK: Causal Inference Principles for Reasoning about Commonsense Causality" by Jiayao Zhang, Hongming Zhang, Weijie J. Su, and Dan Roth.

Abstract

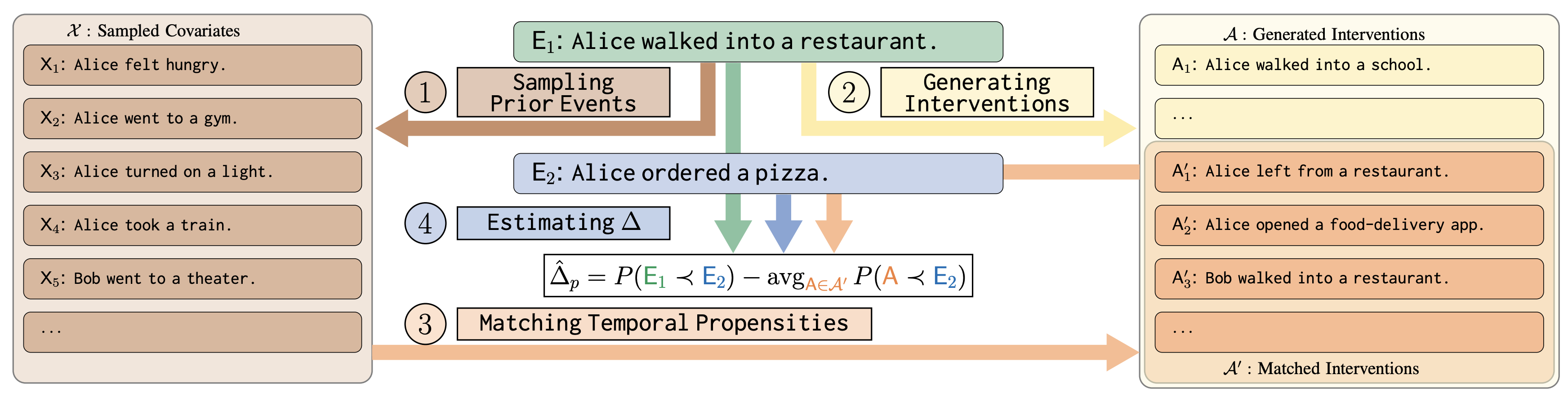

Commonsense causality reasoning (CCR) aims at identifying plausible causes and effects in natural language descriptions that are deemed reasonable by an average person. Although being of great academic and practical interest, this problem is still shadowed by the lack of a well-posed theoretical framework; existing work usually relies on deep language models wholeheartedly, and is potentially susceptible to confounding co-occurrences. Motivated by classical causal principles, we articulate the central question of CCR and draw parallels between human subjects in observational studies and natural languages to adopt CCR to the potential-outcomes framework, which is the first such attempt for commonsense tasks. We propose a novel framework, ROCK, to Reason O(A)bout Commonsense K(C)ausality, which utilizes temporal signals as incidental supervision, and balances confounding effects using temporal propensities that are analogous to propensity scores. The ROCK implementation is modular and zero-shot, and demonstrates good CCR capabilities on various datasets.

Datasets

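Three datasets are used (listed here as they are named in the exp_data files):

- COPA dev (copa_dev)

- COPA test (copa_test)

- GLUCOSE D1 (glucose_d1)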

Models

The ROCK framework contains four components, each of which can be customized. Listed below are the three that make use of language models, together with their default choices.

- Event Sampler: gpt-j-6B

- Intervention Generator: PolyJuice

- Temporal Predictor: RoBERTa fine-tuned on NYT
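Because each component can be customized, one way to picture the modularity is as a set of swappable callables. The sketch below is illustrative only: the `RockComponents` container, its field names, the type aliases, and the toy stand-in functions are all hypothetical and are not the interfaces used in this repo. In practice, the default models listed above would sit behind these slots.

```python
from dataclasses import dataclass, replace
from typing import Callable, List

# Hypothetical type aliases; the real interfaces in this repo may differ.
EventSampler = Callable[[str, int], List[str]]      # (event, n) -> n sampled events
InterventionGenerator = Callable[[str], List[str]]  # event -> perturbed events
TemporalPredictor = Callable[[str, str], float]     # (a, b) -> score that a precedes b


@dataclass(frozen=True)
class RockComponents:
    """Hypothetical container holding one callable per language-model component."""
    event_sampler: EventSampler
    intervention_generator: InterventionGenerator
    temporal_predictor: TemporalPredictor


# Toy stand-ins so the sketch runs without downloading any model.
def toy_sampler(event: str, n: int) -> List[str]:
    return [f"something happened before '{event}' ({i})" for i in range(n)]


def toy_interventions(event: str) -> List[str]:
    return [f"not: {event}"]


def toy_temporal(a: str, b: str) -> float:
    # Placeholder score in [0, 1]; a real predictor would be a fine-tuned LM.
    return 0.5


components = RockComponents(toy_sampler, toy_interventions, toy_temporal)


# Swapping one component leaves the other two untouched.
def always_before(a: str, b: str) -> float:
    return 1.0


custom = replace(components, temporal_predictor=always_before)
```

Keeping the container frozen and using `dataclasses.replace` means a customized configuration is a new object, so the default configuration stays available for comparison.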

Reproducing Experiments

Dependencies

We use Python 3.8 and PyTorch for training neural nets. To install the dependencies (potentially in a virtual environment), run:

pip install -r requirements.txt

Notebook Overview

- result_presentation.ipynb: use this notebook to reproduce all figures and tables in the paper.

- causal_reasoner.ipynb: use this notebook to estimate $\hat{\Delta}_p$. Instructions on extending ROCK are also included there.

- nyt_finetune.ipynb: use this notebook for temporality fine-tuning on the NYT corpus.
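As a rough illustration of what estimating $\hat{\Delta}_p$ can involve, the sketch below computes a propensity-matched difference in temporal scores. It rests on assumptions that are not stated in this README: that each event comes with a vector of temporal propensities, that an intervention counts as matched when its vector lies within a tolerance `eps` of the original event's vector in $L_p$ distance, and that the estimate is the original event's score minus the mean score of the matched interventions. All function and parameter names are hypothetical; causal_reasoner.ipynb is the reference for the actual procedure.

```python
from typing import Optional, Sequence


def lp_distance(u: Sequence[float], v: Sequence[float], p: float = 2.0) -> float:
    """L_p distance between two equally long propensity vectors."""
    if len(u) != len(v):
        raise ValueError("propensity vectors must have the same length")
    return sum(abs(a - b) ** p for a, b in zip(u, v)) ** (1.0 / p)


def delta_hat(
    score_event: float,
    propensity_event: Sequence[float],
    scores_interventions: Sequence[float],
    propensities_interventions: Sequence[Sequence[float]],
    eps: float = 0.1,
    p: float = 2.0,
) -> Optional[float]:
    """Hypothetical matched estimator.

    Keeps only the interventions whose propensity vector is within `eps`
    of the original event's vector, then returns the event's score minus
    the mean score of those matched interventions. Returns None when no
    intervention is matched.
    """
    matched = [
        s
        for s, q in zip(scores_interventions, propensities_interventions)
        if lp_distance(propensity_event, q, p) <= eps
    ]
    if not matched:
        return None
    return score_event - sum(matched) / len(matched)


# Toy numbers, made up for illustration: the third intervention is too far
# from the event in propensity space and is therefore ignored.
estimate = delta_hat(
    score_event=0.9,
    propensity_event=[0.5, 0.5],
    scores_interventions=[0.25, 0.75, 0.0],
    propensities_interventions=[[0.5, 0.5], [0.5, 0.5], [1.0, 0.0]],
)
```

Returning `None` rather than 0 when nothing is matched keeps "no comparable interventions" distinct from "no estimated effect".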

Pre-Computed Results

Some operations are computationally heavy (e.g., the GPT-J model requires 25GB of memory). You can download our pre-computed results using the anonymous Dropbox links below:

- exp_data.zip (155M): This is used by the result_presentation notebook; the causal_reasoner notebook can generate some of the processed data in this archive.

- roberta_ft.tar.gz (1.29G): This is the fine-tuned RoBERTa model we used in our paper as the temporality predictor. It can be generated from the nyt_finetune notebook and is used by the causal_reasoner notebook.

- nyt_ft.zip (9M): This is the fine-tuning dataset we used, obtained from SRL on the original NYT corpus.

Code Structure

After installing the dependencies and downloading all components, the repo structure should look as follows:

.
├── LICENSE                  # code license
├── README.md                # this file
├── causal_reasoner.ipynb
├── nyt_finetune.ipynb
├── result_presentation.ipynb
├── models
│   └── roberta_ft
│       └── ... (omitted)
├── exp_data
│   ├── acc_N_res.csv
│   ├── acc_noft_res_full.csv
│   ├── acc_res_full.csv
│   ├── copa_dev.json
│   ├── copa_dev_probs.csv
│   ├── copa_dev_probs_noft.csv
│   ├── copa_test.json
│   ├── copa_test_probs.csv
│   ├── copa_test_probs_noft.csv
│   ├── glucose_d1_probs.csv
│   ├── glucose_d1_probs_noft.csv
│   └── nyt_fine_tune.csv
└── src
    ├── metric_utils.py
    ├── metrics.py
    ├── pipeline.py
    ├── plotter.py
    ├── train_finetune.py
    └── utils.py
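To check that a local checkout matches the layout above after downloading and unpacking the archives, a small script along these lines may help. It is a sketch rather than part of the repo: the `missing_paths` helper and the `EXPECTED_PATHS` list are hypothetical, and the list covers only a subset of the entries shown in the tree.

```python
from pathlib import Path
from typing import Iterable, List

# A subset of the paths shown in the tree above; extend as needed.
EXPECTED_PATHS = [
    "causal_reasoner.ipynb",
    "nyt_finetune.ipynb",
    "result_presentation.ipynb",
    "models/roberta_ft",
    "exp_data/copa_dev.json",
    "exp_data/copa_test.json",
    "exp_data/nyt_fine_tune.csv",
    "src/pipeline.py",
]


def missing_paths(root: str, expected: Iterable[str] = EXPECTED_PATHS) -> List[str]:
    """Return the expected relative paths that do not exist under `root`."""
    base = Path(root)
    return [rel for rel in expected if not (base / rel).exists()]


if __name__ == "__main__":
    # Run from the repo root.
    missing = missing_paths(".")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Repo layout looks complete.")
```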

Reference

@inproceedings{ZZSR22,
  author = {Jiayao Zhang and Hongming Zhang and Weijie J. Su and Dan Roth},
  title = {ROCK: Causal Inference Principles for Reasoning about Commonsense Causality},
  booktitle = {Proc. of the International Conference on Machine Learning (ICML)},
  year = {2022},
  url = "https://cogcomp.seas.upenn.edu/papers/ZZSR22.pdf",
}